Ed Nuhfer Retired Professor of Geology and Director of Faculty Development and Director of Educational Assessment, enuhfer@earthlink.net, 208-241-5029 (with Steve Fleisher CSU-Channel Islands; Christopher Cogan, Independent Consultant; Karl Wirth, Macalester College and Eric Gaze, Bowdoin College)

In Nuhfer, Cogan, Fleisher, Gaze and Wirth (2016), we noted the statement by Zell and Krizan (2014, p. 111) that “…it remains unclear whether people generally perceive their skills accurately or inaccurately.” In that paper, we showed why innumeracy is a major barrier to understanding metacognitive self-assessment.

Another barrier to progress exists because scholars who separately attempt quantitative measures of self-assessment have no common ground from which to communicate and compare results. This occurs because there is no consensus on what constitutes “good enough” versus “woefully inadequate” metacognitive self-assessment skills. Does overestimating one's competence by 5% justify labeling a person as “overconfident”? We do not believe so. We think that a reasonable range must be exceeded before such labels should be considered to apply.

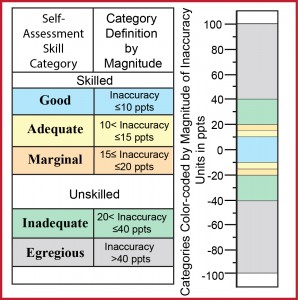

The five of us are now working on a sequel to the Numeracy paper cited above. In the sequel, we interpret the data from 1154 paired measures from a behavioral science perspective, extending the first paper’s description of the data through graphs and numerical analyses. Because we had a database of over a thousand participants, we decided to use it to propose the first classification scheme for metacognitive self-assessment. The scheme defines categories based on the magnitudes of self-assessment inaccuracy (Figure 1).

Figure 1. Draft of a proposed classification scheme for metacognitive self-assessment based upon magnitudes of inaccuracy of self-assessed competence as determined by percentage points (ppts) differences between ratings of self-assessed competence and scores from testing of actual competence, both expressed in percentages.

If you wonder where the “good” definition comes from in Figure 1, we disclosed on page 19 of our Numeracy paper: “We designated self-assessment accuracies within ±10% of zero as good self-assessments. We derived this designation from 69 professors self-assessing their competence, and 74% of them achieving accuracy within ±10%.”
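For readers who want to compare their own paired measures against the “good” band, the arithmetic can be sketched in a few lines of Python. This is only an illustration, not the analysis code from our paper. The function names, the sign convention (positive means overestimation), the inclusive boundary at exactly 10 ppts, and the four example pairs are all assumptions made for the sketch. Only the ±10 band comes from the text above; the other category boundaries in Figure 1 are not reproduced here.

```python
def inaccuracy_ppts(self_assessed_pct, tested_pct):
    """Signed self-assessment inaccuracy in percentage points (ppts).

    Assumed sign convention: positive = overestimate, negative = underestimate.
    Both inputs are percentages on a 0-100 scale.
    """
    return self_assessed_pct - tested_pct


def is_good(self_assessed_pct, tested_pct, good_band=10.0):
    """True when the inaccuracy lies within +/- good_band ppts of zero.

    The default of 10 ppts follows the "good" designation quoted above;
    treating the boundary as inclusive is an assumption.
    """
    return abs(inaccuracy_ppts(self_assessed_pct, tested_pct)) <= good_band


def share_good(pairs, good_band=10.0):
    """Fraction of (self_assessed_pct, tested_pct) pairs rated "good"."""
    if not pairs:
        raise ValueError("need at least one paired measure")
    hits = sum(1 for s, t in pairs if is_good(s, t, good_band))
    return hits / len(pairs)


# Hypothetical paired measures, invented purely for illustration.
example_pairs = [(80, 72), (60, 75), (55, 50), (90, 70)]
print([inaccuracy_ppts(s, t) for s, t in example_pairs])
print(share_good(example_pairs))
```

Changing `good_band` shows how sensitive the share of “good” self-assessors is to where the boundary is drawn, which is the question we put to readers below.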

The other breaks that designate “Adequate,” “Marginal,” “Inadequate,” and “Egregious” admittedly derive from natural breaks based upon measures expressed in percentages. By distribution through the above categories, we found that over 2/3 of our 1154 participants had adequate self-assessment skills, a bit over 21% exhibited inadequate skills, and the remainder lay within the category of “marginal.” We found that less than 3% qualified by our definition as “unskilled and unaware of it.”

These results indicate that the popular perspectives found in web searches, which portray people in general as having grossly overinflated views of their own competence, may be incomplete and perhaps even erroneous. Other researchers are now discovering that the correlations between paired measures of self-assessed competence and actual competence are positive and significant. However, establishing the relationship between self-assessed competence and actual competence appears to require more care in taking the paired measures than many of us researchers earlier suspected.

Do the categories as defined in Figure 1 appear reasonable to other bloggers, or do they conflict with your observations? For instance, where would you place the boundary between “Adequate” and “Inadequate” self-assessment? How would you quantitatively define a person who is “unskilled and unaware of it”? How much should a person overestimate or underestimate before receiving the label of “overconfident” or “underconfident”?

If you have measurements and data, please compare your results with ours before you answer. Data or not, be sure to become familiar with the mathematical artifacts summarized in our January Numeracy paper (linked above) that were mistakenly taken for self-assessment measures in earlier peer-reviewed self-assessment literature.

Our fellow bloggers constitute some of the nation’s foremost thinkers on metacognition, and we value their feedback on how Figure 1 accords with their experiences as we work toward finalizing our sequel paper.