by Ed Nuhfer, California State University (Retired)

Eric Gaze, Bowdoin College

Paul Walter, St. Edward’s University

Simone Mcknight (Simone Erchov), Global Systems Technology

In Part 1, we summarized psychologists’ current understanding of bias. In Part 2, we connect conceptual reasoning and metacognition and show how bias challenges clear reasoning even in “objective” fields like science and math.

Science as conceptual

College catalogs’ explanations of general education (GE) requirements almost universally indicate that the desired learning outcome of the required introductory science course is to produce a conceptual understanding of the nature of science and how it operates. Focusing only on learning disciplinary content in GE courses squeezes out stakeholders’ awareness that a unifying outcome even exists.

Wherever a GE metadisciplinary requirement (for example, science) specifies a choice of a course from among the metadiscipline’s different content disciplines (for example, biology, chemistry, physics, geology), each course must communicate an understanding of the way of knowing established in the metadiscipline. That outcome is what the various content disciplines share. A student can then understand how different courses emphasizing different content can effectively teach the same GE outcome.

The guest editor led a team of ten investigators from four institutions and separate science disciplines (biology, chemistry, environmental science, geology, geography, and physics). Their original proposal was to investigate ways to improve the learning in the GE science courses. While articulating what they held in common as professing the metadiscipline of “science,” the investigators soon recognized that the GE courses they took as students had focused on disciplinary content but scarcely used that content to develop an understanding of science as a way of knowing. After confronting the issue of teaching with such a unifying emphasis, they later turned to the problem of assessing success in producing this different kind of understanding.

Upon discovering no suitable off-the-shelf assessment instrument to meet this need, they constructed the Science Literacy Concept Inventory (SLCI). This instrument later made possible this guest-edited series and the confirmation of knowledge surveys as valid assessments of student learning.

Concept inventories test understanding of the concepts that form the supporting framework for larger overarching blocks of knowledge or thematic ways of thinking or doing. The SLCI tests nine concepts specific to science and three more related to the practice of science and to connecting science’s way of knowing with contributions from other requisite GE metadisciplines.

Self-assessment’s essential role in becoming educated

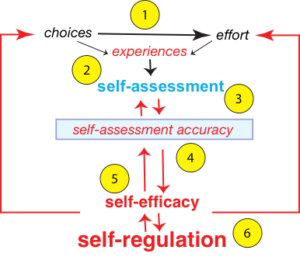

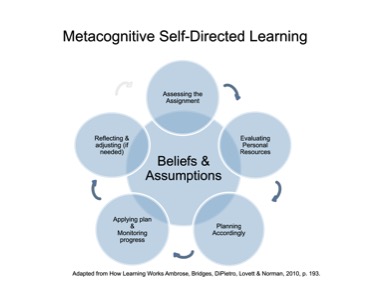

Self-assessment is partly cognitive (the knowledge one has) and partly affective (what one feels about the sufficiency of that knowledge to address a present challenge). Self-assessment accuracy reflects how well a person can align the two when confronting a challenge.

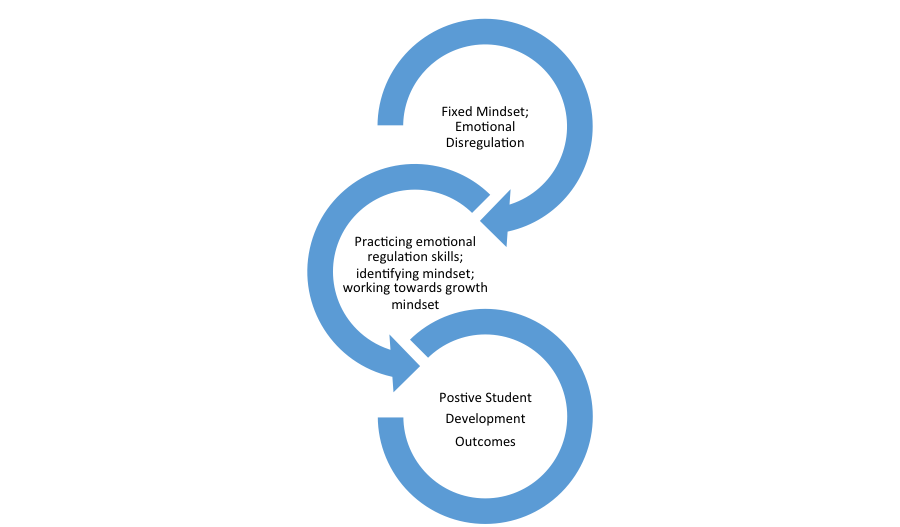

Developing good self-assessment accuracy begins with an awareness that having a deeper understanding feels different from merely having the surface knowledge needed to pass a multiple-choice test. The ability to accurately feel when deep learning has occurred reveals to the individual when sufficient preparation for a challenge has, in fact, been achieved. We can increase learners’ capacity for metacognition by requiring frequent self-assessments that give them the practice needed to develop self-assessment accuracy. Nowhere is teaching such metacognition more needed than in the introductory GE courses.

Regarding our example of science, the 25 items on the SLCI that test understanding of the twelve concepts derive from actual cases and events in science. Their connection to bias lies in learning that when things go wrong in doing or learning science, some concept is unconsciously being ignored or violated. Violations are often traceable to bias that hijacked the ability to use available evidence.

We often say: “Metacognition is thinking about thinking.” When encountering science, we seek to teach students to “think about” (1) “What am I feeling that I want to be true and why do I have that feeling?” and (2) “When I encounter a scientific topic in popular media, can I articulate what concept of science’s way of knowing was involved in creating the knowledge addressed in the article?”

Examples of bias in physical science

“Misconceptions research” constitutes a block of science education scholarship. Schools do not teach the misconceptions. Instead, people develop preferred explanations for the physical world from conversations that mostly occur in pre-college years. One such explanation addresses why summers are warm and winters are cold. The explanation that Earth is closer to the sun in summer is common and acquired by hearing it as a child. The explanation is affectively comfortable because it is easy, with the ease coming from repeatedly using the neural network that contains the explanation to explain the seasonal temperatures we experience. We eventually come to believe that it is true. However, it is not true. It is a misconception.

When a misconception becomes ingrained in our brain neurology over many years of repeated use, we cannot easily break our habit of invoking the neural network that holds the misconception until we can bypass it by constructing a new network that holds the correct explanation. Still, the new network will not be more comfortable to invoke until usage sufficiently ingrains it. Our biased tendency is to invoke the most ingrained explanation because doing so is easy.

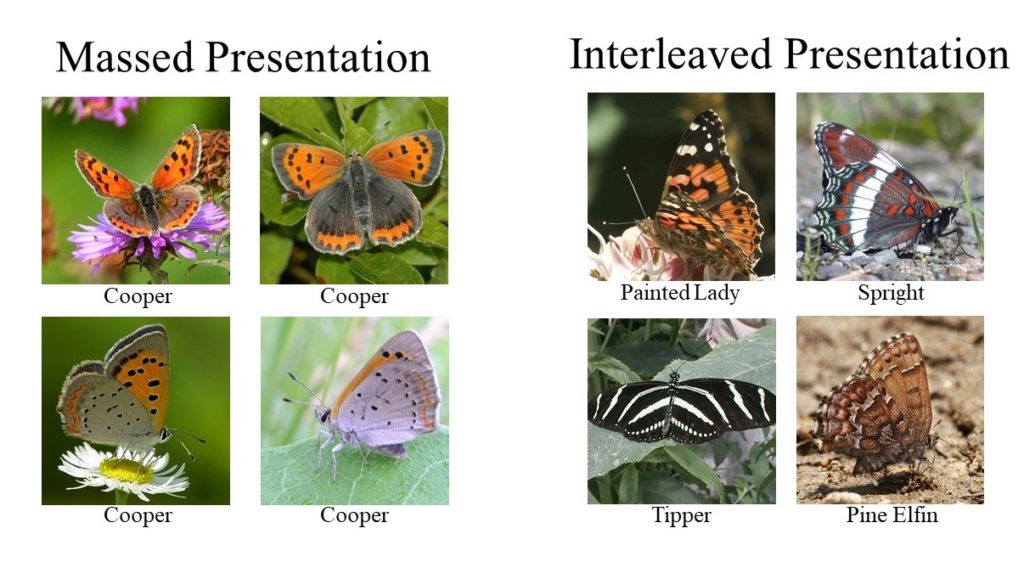

Even when individuals learn better, they often revert to invoking the older, ingrained misconception. After physicists developed the Force Concept Inventory (FCI) to assess students’ understanding of conceptual relationships about force and motion, they discovered that GE physics courses only temporarily dislodged students’ misconceptions. Many students soon reverted to invoking their previous misconceptions. The same investigators revolutionized physics education by confirming that active learning instruction better promoted overcoming misconceptions than did traditional lecturing.

The pedagogy that succeeds seemingly activates a more extensive neural network (through interactive discussing, individual and team work on problem challenges, writing, visualizing through drawing, etc.) than was activated to initially install the misconception (learning it through a brief encounter).

Biases that add a desire to believe that something is true or untrue are especially difficult to dislodge. An example of the power of bias with emotional attachment comes from geoscience.

Nearly all school children in America today are familiar with the plate tectonics model, moving continents, and ephemeral ocean basins. Yet few realize that the central ideas of plate tectonics were once scorned as “Germanic pseudoscience” in the United States. That happened because a few prominent American geoscientists wanted so much to believe their established explanations were true that their affect hijacked their ability to perceive the evidence. These geoscientists also exercised enough influence in the U.S. to keep plate tectonics out of American introductory-level textbooks. American universities introduced plate tectonics in introductory GE courses years later than European universities did.

Example of bias in quantitative reasoning

People usually cite mathematics as the most dispassionate discipline and the one least likely to be corrupted by bias. However, researchers Dan Kahan and colleagues demonstrated that bias also disrupts people’s ability to use quantitative data and think clearly.

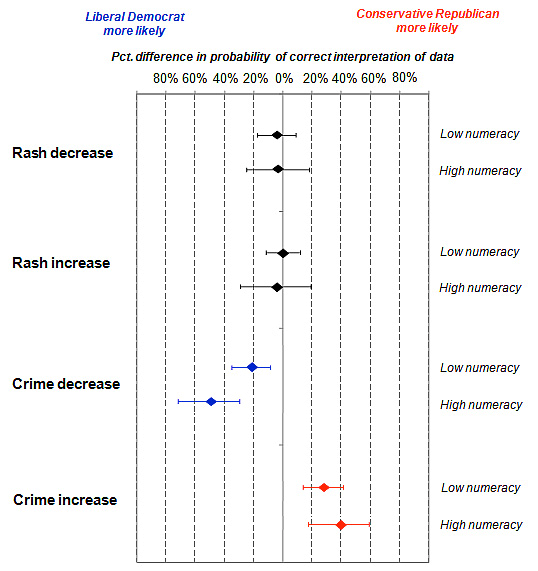

Researchers asked participants to resolve whether a skin cream effectively treated a skin rash. Participants received data for subjects who did or did not use the skin cream. Among users, the rash got better in 223 cases and got worse in 75 cases. Of subjects who did not use the skin cream, the rash got better in 107 cases and worse in 21 cases.

Participants then used the data to select from two choices: (A) People who used the cream were more likely to get better or (B) People who used the cream were more likely to get worse. More than half of the participants (59%) selected the answer not supported by the data. This query was primarily a numeracy test: answering it correctly requires comparing the proportion of cases that improved in each group rather than the raw counts.
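The arithmetic behind the skin cream question can be checked in a few lines. The following is only an illustrative sketch in Python that uses the four counts quoted above; the variable and function names are our own and are not taken from Kahan et al.

```python
# Counts as quoted in the text above for the skin cream question.
improved_with_cream, worsened_with_cream = 223, 75
improved_without_cream, worsened_without_cream = 107, 21


def improvement_rate(improved: int, worsened: int) -> float:
    """Return the fraction of a group whose rash got better."""
    return improved / (improved + worsened)


rate_with = improvement_rate(improved_with_cream, worsened_with_cream)
rate_without = improvement_rate(improved_without_cream, worsened_without_cream)

print(f"Improved with cream:    {rate_with:.1%}")
print(f"Improved without cream: {rate_without:.1%}")

# Choice (A) says users were more likely to get better; (B) says more likely to get worse.
supported = "A" if rate_with > rate_without else "B"
print(f"Choice supported by these counts: ({supported})")
```

With these counts, about 74.8% of cream users improved, compared with about 83.6% of non-users, so the counts support choice (B). The larger raw number of improved cases among users (223 versus 107) is what makes choice (A) tempting.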

Then, using the same numbers, the researchers added affective bait. They replaced the skin cream query with a query about the effects of gun control on crime in two cities. One city allowed concealed gun carry, and another banned concealed gun carry. Participants had to decide whether the data showed that concealed carry bans increased or decreased crime.

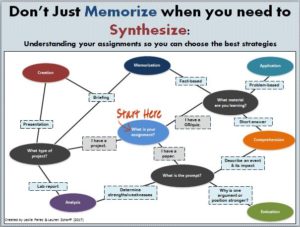

Self-identified conservative Republicans and liberal Democrats responded with a desire to believe acquired from their party affiliations. The results were even more erroneous than those in the skin cream case. Republicans greatly overestimated increased crime from gun bans, but no more than Democrats overestimated decreased crime from gun bans (Figure 1). When operating from “my-side” bias planted by either party, citizens significantly lost their ability to think critically and use numerical evidence. This was true whether the self-identified partisans had low or high numeracy skills.

Figure 1. Effect of bias on interpreting simple quantitative information (from Kahan et al. 2013, Fig. 8). Numerical data needed to answer whether a cream effectively treated a rash triggered low-bias responses. When researchers employed the same data to determine whether gun control effectively changed crime, polarizing emotions triggered by partisanship significantly subverted the use of evidence toward what one wanted to believe.

Takeaway

Decisions and conclusions that appear to be based solely on objective data rarely are. Increasing metacognitive capacity produces awareness of the prevalence of bias.