by Gina Burkart, Ed.D., Learning Specialist, Clarke University

Pedagogy for Embedding Strategies into Classes

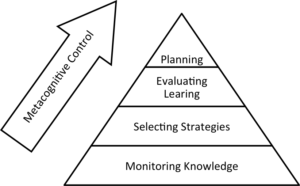

The transition to college is difficult. When they fail their first exam, students quickly discover that their old strategies from high school do not serve them well in college. As the Learning Specialist, I guide these students in modifying strategies and behaviors and in finding new strategies. This also involves helping them move away from a fixed mindset, in which they believe some students are just born smarter than others, and toward a growth mindset, in which they reflect on their habits and strategies, set goals, and make changes to achieve desired outcomes. Reflective metacognitive discussion and exercises that develop a growth mindset are necessary for this type of triaging with students (Dweck, 2006; Masters, 2013; Efklides, 2008; VanZile-Tamsen & Livingston, 1999; Livingston, 2003).

As the Learning Specialist at the University, I work with students who are struggling, and I also work with professors in developing better teaching strategies to reach students. When learning is breaking down, I have found that oftentimes the most efficient and effective method of helping students find better strategies is to collaborate with the professor and facilitate strategy workshops in the classroom tailored to the course curriculum. This allows me to work with several students in a short amount of time—while also supporting the professor by demonstrating teaching strategies he or she might integrate into future classes.

An example of a workshop that works well when learning is breaking down in the classroom is the post-test analysis workshop. The post-test analysis workshop (see activity details below) often works well in classes after the first exam. Since most students are stressed about their test results, the metacognitive workshop de-escalates anxiety by guiding students through strategic reflection on the exam. The reflection demonstrates how to analyze the results of the exam so that they can form new habits and behaviors in an attempt to learn and perform better on the next exam. The corrected exam is an effective tool for fostering metacognition because it shows the students where errors have occurred in their cognitive processing (Efklides, 2008). The activity also increases self-awareness, which is imperative to metacognition, as it helps students connect past actions with future goals (Vogeley, Kurthen, Falkai, & Maier, 1999). This is an important step in helping students take control of their own learning and increasing motivation (VanZile-Tamsen & Livingston, 1999; Palmer & Goetz, 1988; Pintrich & DeGroot, 1990).

Post-Test Analysis Activity

When facilitating this activity, I begin by having the professor hand back the exams. I then take the students through a series of prompts that engage them in metacognitive analysis of their performance on the exams. Since metacognitive experiences also require an awareness of feeling (Efklides, 2008), it works well to have students begin by recalling how they felt after the exam:

- How did you feel?

- How did you think you did?

- Were your feelings and predictions accurate?

The post-test analysis then prompts the students to connect their feelings with how they prepared for the exam:

- What strategies did you use to study?

  - Bloom’s Taxonomy: predicting and writing test questions from book and notes

  - Group study

  - Individual study

  - Concept cards

  - Study guides

  - Created concept maps of the chapters

  - Synthesized notes

  - Other methods?

Students are given 1-3 minutes to reflect in journal writing upon those questions. They are then prompted to analyze where the test questions came from (book, notes, PowerPoint, lab, supplemental essay, online materials, etc.). It may be helpful to have students work collaboratively for this.

An Analysis of the Test—Where the Information Came From

- For each question, identify where the test question came from:

  - Book (B)

  - In-class notes (C)

  - Online materials (O)

  - Supplemental readings (S)

  - Not sure (?)

After identifying where the test information came from, students are then prompted to reflect in journal writing upon the questions they missed and how they might study differently based upon those questions and where they came from. For example, a student may realize that he or she missed all of the questions that came from the book. That student may then set a goal of synthesizing class notes with material from the book within 30 minutes after class, and then using note reduction to create a concept map to study for the next test.

Another student might realize that she missed questions because of test-taking errors. For example, she didn’t carefully read the entire question and then chose the wrong response. To resolve this issue, she might decide to underline question words on the test in an attempt to slow down while reading test questions. She might also realize that she changed several responses that she originally had correct, and resolve to resist the urge to overthink her choices and change responses on the next test.

Next, students are taught about Bloom’s Taxonomy and how it is used by professors to write exams. In small groups, students then use Bloom’s Taxonomy to identify question types. This will take about 20-30 minutes, depending upon the length of the test. For example, students would identify the following test question as a comprehension-level question: “Which of the following best describes positive reinforcement?” The following question, by contrast, would be noted as an application-level question: “Amy’s parents give her a lollipop every time she successfully uses the toilet. What type of reinforcement is this?”

Question Type: Identify What Level of Bloom’s Taxonomy the Test Question is Assessing

- Knowledge-level questions

- Comprehension

- Application

- Analysis

- Synthesis

- Evaluation

Students sometimes struggle to distinguish the different levels of questions, so it is helpful to ask small groups to share their identified questions with the large group and explain how they determined each question’s level. The professor is also a helpful resource in this discussion.

After discussion of the question types, students then return to individual reflection, as they are asked to count the number of questions they missed for each level of Bloom’s Taxonomy. They are also asked to reflect upon what new strategies they will use to study based on this new awareness.

Adding It All Up

- Count the number of questions missed in each level of Bloom’s Taxonomy.

- Which types of questions did you miss most often?

- Compare this with your study methods.

- What adjustments might you make in your studying and learning of class material based on this information? Which levels of Bloom’s Taxonomy do you need to focus more on with your studying?
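For classes where students record their analysis electronically, the counting step above can be sketched in a few lines of Python. This is only an illustration, not part of the workshop as I facilitate it: the `missed` list, its sample entries, and the `tally_missed` function are hypothetical, and the source codes follow the B/C/O/S/? labels used earlier in the activity.

```python
from collections import Counter

# Hypothetical record of one student's missed questions:
# (question number, source code, Bloom's level).
# Source codes: B = book, C = in-class notes, O = online materials,
# S = supplemental readings, ? = not sure.
missed = [
    (3, "B", "Application"),
    (7, "B", "Analysis"),
    (9, "C", "Application"),
    (12, "?", "Knowledge"),
    (15, "B", "Application"),
]


def tally_missed(missed_questions):
    """Count missed questions by source and by Bloom's level."""
    by_source = Counter(source for _, source, _ in missed_questions)
    by_level = Counter(level for _, _, level in missed_questions)
    return by_source, by_level


by_source, by_level = tally_missed(missed)

# List the levels from most missed to least missed.
for level, count in by_level.most_common():
    print(f"{level}: {count} missed")
```

In this made-up example, most of the missed questions are application-level questions drawn from the book, which would point the student toward practicing application of the book material rather than rereading class notes.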

Finally, students are asked to use the class reflections and post-test assessment to create a new learning plan for the course. (See the learning plan in my previous post, Facilitating Metacognition in the Classroom: Teaching to the Needs of Your Students.) Creating the Learning Plan could be a graded assignment that students are asked to do outside of class and then turn in. Students could also be referred to the Academic Resource Center on campus for additional support in formulating the Learning Plan. Additionally, a similar post-test assessment could be assigned outside of class for subsequent exams and be given a point value. This would allow for ongoing metacognitive reflection and self-regulated learning.

This type of Cognitive Strategy Instruction (Scheid, 1993) embedded into the classroom offers students a chance to become more aware of their own cognitive processes, strategies for improving learning, and the practice of using cognitive and metacognitive processes in assessing their success (Livingston, 2003). Importantly, these types of reflective assignments move students away from a fixed mindset and toward a growth mindset (Dweck, 2006). As Masters (2013) pointed out, “Assessment information of this kind provides starting points for teaching and learning.” Additionally, because post-test assessment offers students greater self-efficacy, control of their own learning, purpose, and an emphasis on the learning rather than the test score, it also positively affects motivation (VanZile-Tamsen & Livingston, 1999).

References

Dweck, C. S. (2006). Mindset: The new psychology of success. New York: Ballantine Books.

Efklides, A. (2008). Metacognition: Defining its facets and levels of functioning in relation to self-regulation and co-regulation. European Psychologist, 13 (4), 277-287. Retrieved from https://www.researchgate.net/publication/232452693_Metacognition_Defining_Its_Facets_ad_Levels_of_Functioning_in_Relation_to_Self-Regulation_and_Co-regulation

Livingston, J. A. (2003). Metacognition: An overview. Retrieved from https://files.eric.ed.gov/fulltext/ED474273.pdf

Masters, G. N. (2013). Towards a growth mindset assessment. Retrieved from https://research.acer.edu.au/cgi/viewcontent.cgi?article=1017&context=ar_misc

Palmer, D. J., & Goetz, E. T. (1988). Selection and use of study strategies: The role of studier’s beliefs about self and strategies. In C. E. Weinstein, E. T. Goetz, & P. A. Alexander (Eds.), Learning and study strategies: Issues in assessment, instruction, and evaluation (pp. 41-61). San Diego, CA: Academic.

Pintrich, P. R., & DeGroot, E. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82, 33-40.

VanZile-Tamsen, C., & Livingston, J. A. (1999). The differential impact of motivation on the self-regulated strategy use of high- and low-achieving college students. Journal of College Student Development, 40(1), 54-60. Retrieved from https://www.researchgate.net/publication/232503812_The_differential_impact_of_motivation_on_the_self-regulated_strategy_use_of_high-_and_low-achieving_college_students

Vogeley, K., Kurthen, M., Falkai, P., & Maier, W. (1999). Essential functions of the human self model are implemented in the prefrontal cortex. Consciousness and Cognition, 8, 343-363.