By Dr. Gina Burkart, Clarke University

Metacognition and First-Year Students

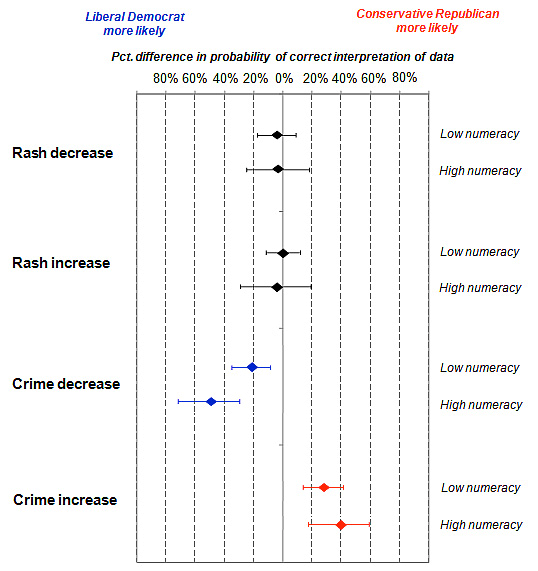

The first year of college can be a difficult transition for students, as they often lack many of the basic skills needed to navigate the cognitive dissonance that arises during that year. Integrating tools, strategies, and assessments that foster self-reflection into first-year writing courses offers students the opportunity to think about their own thinking. Students are often unable to “bridge the gap” to college because they don’t have the “meta-discourse” or “meta-awareness” to engage in the writing and discussions of the university (Gennrich & Dison, 2018, pp. 4-5). Essentially, it is important for first-year students to engage in what Flavell (1979) described as metacognition: thinking about what they are doing, how they are doing it, and why they are doing it, so they can make adjustments to accurately and effectively meet the needs of the situation, purpose, and audience (Victori, 1999).

This type of rhetorical analysis builds metacognitive practices integral to first-year writing and can guide students in discovering their own voice and learning how to use it in different academic disciplines. Within the first-year writing course, portfolio conferences are especially helpful in leading students through this type of metacognitive practice (Gencel, 2017; Alexiou & Paraskeva, 2010; 2013; Farahian & Avarzamani, 2018). However, the course curriculum should be thoughtfully designed so that students are introduced to metacognition at the beginning of the semester and led through it repeatedly throughout the semester (Schraw, 1999).

Designing a First-Year Writing Course that Facilitates Metacognition

While self-reflection has always been part of the first-year writing courses I have taught, this year I introduced metacognition at the beginning of the semester and reinforced it throughout the semester as students engaged with the course theme – Motivation. I embedded my college-success curriculum, 16 Weeks to College Success: The Mindful Student (Burkart, 2023), into the course, which provided tools and strategies for the students to use as they learned college writing practices.

This approach was particularly helpful in a writing class because the metacognitive reflective activities reinforced writing as a tool for learning. For example, during the first week of the semester, students discussed the syllabus in groups and created learning plans for the course using a template. The template helped them pull out essential information and think through a personalized action plan to find success within the context of the course (see Learning Plan Template, Figure 1). With respect to the course readings, for instance, the plan asked: How would they read? What strategies would they use? How much time would they devote to the reading? How often would they read? What resources would they use? Students turned in their learning plans for assigned points, and I read through the plans and made comments and suggestions. Students were told that they would update their plans over the course of the semester and place the updated plans in their portfolios to display growth over time.

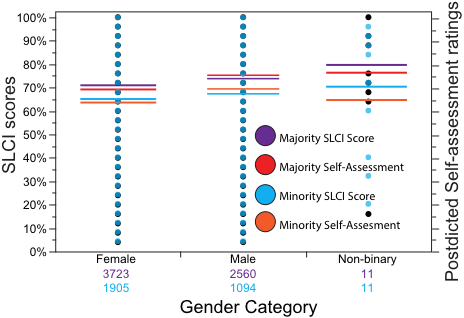

Students also took two self-assessments, set goals based on their self-assessments, and created a time management and study plan to achieve their goals. Like the learning plans, these activities were turned in for points and feedback and included in the final portfolios to demonstrate growth. The first self-assessment asked students to rate themselves on a scale of 1-5 in key skill areas that impact success: Reading, Writing, Note-Taking, Time Management, Organization, Test-Taking, Oral Communication, Studying, and Motivation. This self-assessment also included metacognitive reflection questions about the skills and how they relate to the course. The second self-assessment (included in the 16 Weeks textbook) was the LASSI (Weinstein, Palmer, & Acee, 2024), a nationally normed self-assessment of the key indicators of college success, which provided quantitative data on a scale of 1-99. The LASSI dimensions were mapped to the skill areas for success to facilitate students’ goal setting using the goal setting chart (see Goal Setting Chart, Figure 2).

Throughout the semester, students completed skill and strategy activities from the 16 Weeks textbook that supported their goals and helped them complete course assignments. For example, when students were assigned their first readings, strategies for critical reading were also introduced and assigned. Students demonstrated their application of the reading strategies to the course readings in a reading journal, for which they received points and feedback. This reading journal was included in the portfolio (see Reading Journal template, Figure 3).

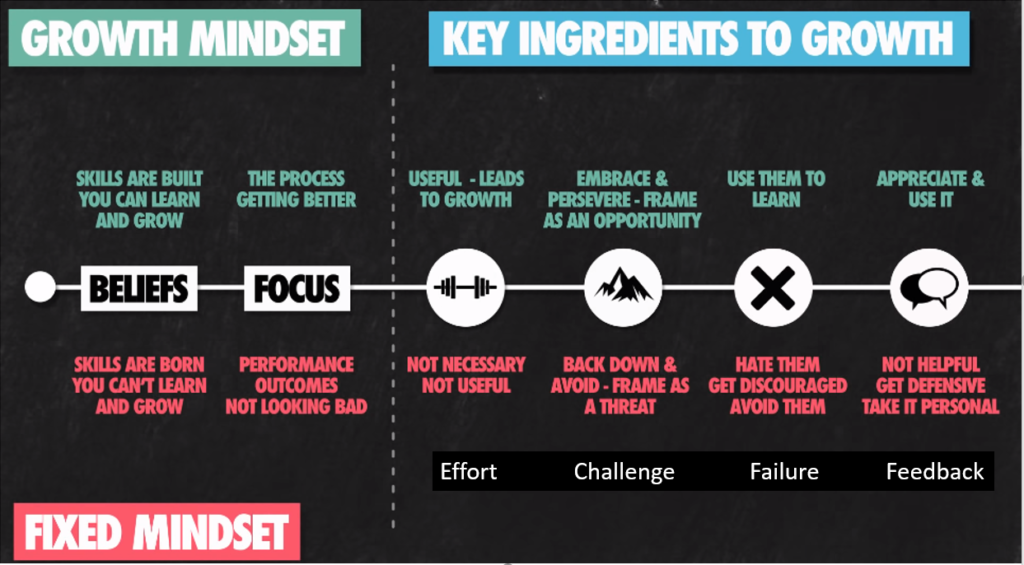

Students were also taught note-taking strategies so they could take more effective notes while watching assigned course videos on Growth Mindset, Grit, and Emotional Courage. As a result, students were able to learn and apply new strategies while being exposed to key concepts related to identity, self-reflection, and metacognition. In fact, all of the course writing, speaking, and reading assignments led students to learn and think about topics that reinforce metacognition.

Portfolio Assessment

As portfolio assessment has been shown to positively affect metacognition and writing instruction (Farahian & Avarzamani, 2018; Alexiou & Paraskeva, 2010; 2013; Gencel, 2017), it seemed an appropriate culminating assessment for the course. The course curriculum built toward the portfolio throughout the semester in that the course assignments followed the four steps suggested by Schraw (1998): 1) introducing and reinforcing an awareness of metacognition, 2) supporting course learning and use of strategies, 3) encouraging regulated learning, and 4) offering a setting rich with metacognition. Additionally, the course curriculum and final portfolio assessment conference addressed the three suggested variables of metacognitive knowledge: person, task, and strategy.

Students created a cover letter for their portfolios describing their growth in achieving the goals they set over the course of the semester. Specifically, they described the new strategies, tools, and resources they applied in this course and their other courses that helped them grow in their goal areas. The portfolio included artifacts demonstrating this application and growth. Additionally, students included their self-assessments and adjusted learning plans. Students also took the LASSI as a post-test in week 14 and were asked to include the pre- and post-test results in their portfolios so they could compare their quantitative results and discuss their growth and plans for continued growth as part of the final conference (see Portfolio Rubric, Figure 4).

During the conferences, students read their cover letters and talked me through their portfolios, showing me how they used and applied strategies and grew in their goal areas over the course of the semester. Part of the conversation addressed how they would continue to apply or adjust strategies, tools, and resources to continue that growth.

To reinforce metacognition and self-reflection, I had students score themselves with the portfolio and cover letter rubrics. I then scored them, and we discussed the scores. In all instances, students scored themselves either lower than or the same as I scored them. They also appreciated hearing how I arrived at the scores and receiving my feedback on their work.

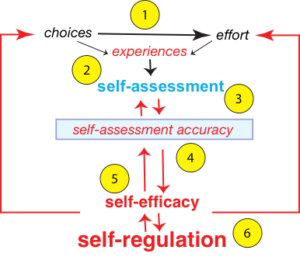

In summary, students enjoyed the portfolio conferences and shared that they wished more professors used portfolios as assessments. They also shared that they enjoyed looking at their growth and putting the portfolio together. All students expressed a deeper understanding of themselves and of the expectations of college writing, reading, and learning. They also demonstrated an understanding of strategies and tools to use moving forward and expressed gratitude for being given them. LASSI scores showed the greatest growth in Anxiety, Selecting Main Ideas, and Self-Testing in the fall semester, and in Time Management, Concentration, and Information Processing/Self-Testing in the spring semester. While the results reinforce that students show different areas of growth, students in both classes demonstrated their highest growth in a reading-related area (Selecting Main Ideas or Information Processing). Additionally, several of these skill areas (Time Management, Concentration, and Self-Testing) demonstrate the ability to self-regulate, suggesting that regular reinforcement of metacognition throughout the writing course and the portfolio assignment may have had a positive effect on growth and the acquisition of metacognitive practices (Schraw, 1999; Veenman, Van Hout-Wolters, & Afflerbach, 2006).

References

Alexiou, A., & Paraskeva, F. (2010). Enhancing self-regulated learning skills through the implementation of an e-portfolio tool. Procedia Social and Behavioral Sciences, 2, 3048–3054.

Burkart, G. (2023). 16 weeks to college success: The mindful student. Dubuque, Iowa: Kendall Hunt.

Farahian, M., & Avarzamani, F. (2018). The impact of portfolio on EFL learners’ metacognition and writing performance. Cogent Education, 5(1), 1450918. https://doi.org/10.1080/2331186X.2018.1450918

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive developmental inquiry. American Psychologist, 34, 906–911. https://doi.org/10.1037/0003-066X.34.10.906

Gencel, I. E. (2017). The effect of portfolio assessments on metacognitive skills and on attitudes toward a course. Educational Sciences: Theory & Practice, 293-319.

Gennrich, T., & Dison, L. (2018). Voice matters: Students struggle to find voice. Reading & Writing, 9(1), 1-8.

Schraw, G. (1999). The effect of metacognitive knowledge on local and global monitoring. Contemporary Educational Psychology, 19, 143-154.

Veenman, M. V., Van Hout-Wolters, B. H., & Afflerbach, P. (2006). Metacognition and learning: Conceptual and methodological considerations. Metacognition and Learning, 1, 3-14.

Victori, M. (1999). An analysis of writing knowledge in EFL composing: A case study of two effective and two less effective writers. System, 27(4), 537-555.

Weinstein, C., Palmer, D., & Acee, T. (2024). LASSI: Learning and study strategy inventory. https://www.collegelassi.com/lassi/index.html