by John Draeger (SUNY Buffalo State)

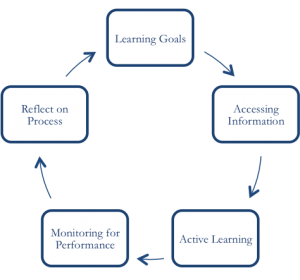

When Lauren Scharff invited me to join Improve with Metacognition last year, I was only vaguely aware of what ‘metacognition’ meant. As a philosopher, I knew about various models of critical thinking and I had some inkling that metacognition was something more than critical thought, but I could not have characterized the extra bit. In her post last week, Scharff shared a working definition of ‘metacognitive instruction’ developed by a group of us involved as co-investigators on a project (Scharff, 2015). She suggested that it is the “intentional and ongoing interaction between awareness and self-regulation.” This is better than anything I had a year ago, but I want to push the dialogue further.

I’d like to take a step back to consider the conceptual nature of metacognition by applying an approach from legal philosophy used to analyze conceptually vague terms. While clarity is desirable, Jeremy Waldron argues that there are limits to the precision that legal discourse can achieve (Waldron, 1994). This is not an invitation to be sloppy, but rather an acknowledgement that certain legal concepts are inescapably vague. According to Waldron, a concept can be vague in at least two ways. First, particular instantiations can fall along a continuum (e.g., actions can be more or less reckless, negligent, excessive, or unreasonable). Second, some concepts can be understood in terms of overlapping features. Democracies, for example, can be characterized by some combination of formal laws, informal patterns of participation, shared history, common values, and collective purpose. These features are neither individually necessary nor jointly sufficient for a full characterization of the concept. Rather, a system of government counts as democratic if it has “enough” of the features. A particular democratic system may look very different from its democratic neighbor, partly because the two systems instantiate the features differently and partly because one of them might lack some feature altogether. Moreover, democratic systems can share features with other forms of government (e.g., formal laws, common values, and collective purpose) without there being a clear boundary between democratic and non-democratic forms of government. According to Waldron, then, there can be vagueness both within the concept of democracy itself and in the boundaries between it and related concepts.

While some might worry that the vagueness of legal concepts is a problem for legal discourse, Waldron argues that the lack of precision is desirable because it promotes dialogue. For instance, when deciding whether a particular instance of forceful policing should count as ‘excessive,’ we must ask under what conditions force is justified and where the limits of acceptability lie. Answering these questions requires exploring the nature of justice, civil rights, and public safety. Dialogue is valuable, in Waldron’s view, because it brings clarity to a broad constellation of legal issues, even though clarity about any one of the constituents requires thinking carefully about the other elements in the constellation.

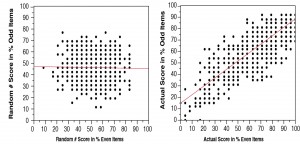

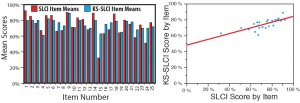

Is ‘metacognition’ vague in the ways that legal concepts can be vague? To answer this question, consider some elements in the metacognitive constellation as described by our regular Improve with Metacognition blog contributors. Self-assessment, for example, is a feature of metacognition (Fleisher, 2014; Nuhfer, 2014). Note, however, that it is vague. First, self-assessments may fall along a continuum (e.g., students and instructors can be more or less accurate in their self-assessments). Second, self-assessment is composed of a variety of activities (e.g., predicting exam scores, tracking gains in performance, understanding personal weak spots, and understanding one’s own level of confidence, motivation, and interest). These activities are neither individually necessary nor jointly sufficient for a full characterization of self-assessment. Rather, students or instructors are engaged in self-assessment if they engage in “enough” of these activities. The two forms of vagueness also combine: each of the overlapping features can itself fall along a continuum (e.g., one can be more or less accurate at tracking performance or understanding motivations). Moreover, self-assessment shares features with related concepts such as self-testing (Taraban, Paniukov, and Kiser, 2014), mindfulness (Was, 2014), calibration (Gutierrez, 2014), and growth mindsets (Peak, 2015). All are part of the metacognitive constellation of concepts. Each of these concepts is individually vague in both senses described above, and the boundaries between them are inescapably fuzzy. Turning to Scharff’s description of metacognitive instruction, all four constituent elements (i.e., ‘intentional,’ ‘ongoing interaction,’ ‘awareness,’ and ‘self-regulation’) are also vague in both senses. Thus, I believe that ‘metacognition’ is vague in the ways legal concepts are vague.

Some might worry that this vagueness is a problem. However, if Waldron is right about the benefits of discussing and grappling with vague legal concepts (and I think he is), and if the analogy between vague legal concepts and the term ‘metacognition’ holds (and I think it does), then the vagueness of ‘metacognition’ should be seen as desirable because it facilitates broad dialogue about teaching and learning.

As Improve with Metacognition celebrates its first birthday, I want to thank all those who have contributed to the conversation so far. Despite the variety of perspectives, each contribution helps us think more carefully about what we are doing and why. The ongoing dialogue can improve our metacognitive skills and enhance our teaching and learning. As we move into our second year, I hope we can continue exploring the rich nature of the metacognitive constellation of ideas.

References

Fleisher, Steven (2014). “Self-assessment, it’s a good thing to do.” Retrieved from https://www.improvewithmetacognition.com/self-assessment-its-a-good-thing-to-do/

Gutierrez, Antonio (2014). “Comprehension monitoring: the role of conditional knowledge.” Retrieved from https://www.improvewithmetacognition.com/comprehension-monitoring-the-role-of-conditional-knowledge/

Nuhfer, Ed (2014). “Self-Assessment and the affective quality of metacognition Part 1 of 2.” Retrieved from https://www.improvewithmetacognition.com/self-assessment-and-the-affective-quality-of-metacognition-part-1-of-2/

Peak, Charity (2015). “Linking mindset to metacognition.” Retrieved from https://www.improvewithmetacognition.com/linking-mindset-metacognition/

Scharff, Lauren (2015). “What do we mean by ‘metacognitive instruction’?” Retrieved from https://www.improvewithmetacognition.com/what-do-we-mean-by-metacognitive-instruction/

Taraban, Roman, Paniukov, Dmitrii, and Kiser, Michelle (2014). “What metacognitive skills do developmental college readers need?” Retrieved from https://www.improvewithmetacognition.com/what-metacognitive-skills-do-developmental-college-readers-need/

Waldron, Jeremy (1994). “Vagueness in Law and Language: Some Philosophical Issues.” California Law Review 82(3): 509-540.

Was, Chris (2014). “Mindfulness perspective on metacognition.” Retrieved from https://www.improvewithmetacognition.com/a-mindfulness-perspective-on-metacognition/
