Mabel Ho, Department of Sociology, University of British Columbia

Katherine Lyon, Department of Sociology and Vantage One, UBC

Jennifer Lightfoot, Academic English Program, Vantage One, UBC

Amber Shaw, Academic English Program, Vantage One, UBC

Background and Motivation for Using Reflective Worksheets in Introductory Sociology

Research shows that, for first-year students in particular, lectures interspersed with active learning opportunities are more effective than either pedagogical approach on its own (Harrington & Zakrajsek, 2017). In-class reflection opportunities are a form of active learning shown to enhance cognitive engagement (Mayer, 2009), critical thinking skills (Colley et al., 2012), and immediate and long-term recall of concepts (Davis & Hult, 1997), while reducing information overload, which can limit learning (Kaczmarzyk et al., 2013). Further, reflection conducted in class has been shown to be more effective than reflection conducted outside of class (Embo et al., 2014). Providing students with in-class activities that explicitly teach metacognitive strategies has been shown to increase motivation, autonomy, responsibility, and ownership of learning (Machaal, 2015) and to improve academic performance (Aghaie & Zhang, 2012; Tanner, 2012).

We created and implemented reflective worksheets (see Appendix) in multiple sections of a first-year sociology course at a large research university with a high proportion of international English as an Additional Language (EAL) students. While all first-year students must learn to navigate both the academic and discipline-specific language expectations of university, many international students may face additional barriers. These students must meet new expectations in an additional language and may bring different cultural assumptions; for example, they may be unfamiliar with active learning and with the thought processes privileged in Western academic institutions. With both domestic and international students in mind, our aims with these reflective worksheets are to:

- facilitate and enhance students’ abilities to notice and monitor disciplinary awareness and knowledge while promoting disciplinary comprehension and practices.

- connect course material to personal experiences (micro) and social trends (macro).

Nuts and Bolts: Method

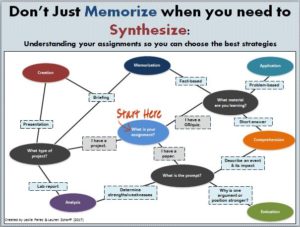

We structured individual written reflection opportunities every 10-15 minutes in each lecture in small (25 students), medium (50 students), and large (100 students) classes. Each lesson lasted one hour, and students completed the worksheets during class time in five-minute segments. The worksheets had different question prompts designed to help students:

- identify affective and cognitive preconceptions about topics

- paraphrase or explain concepts

- construct examples of concepts just learned

- contrast terms

- describe benefits and limitations of social processes

- relate a concept to their own lives and/or cultural contexts

- discover connections between new material and prior knowledge (Muncy, 2014)

- summarize key lecture points (Davis & Hult, 1997)

- reflect on their own process of learning (see Appendix for further examples)

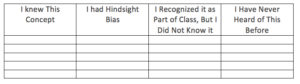

The question prompts model how to think about a topic, rather than what to think. These reflective worksheets are a way to teach students to think like disciplinary specialists in sociology, an aim that aligns with the course learning outcomes. Completed worksheets were graded by teaching assistants (TAs), who used the rubric below (see Table 1) to assess students’ application and critical thinking skills. By framing the worksheets as participation marks, we motivated students to complete the assigned work while learning how to approach sociology as a discipline. Following Tanner’s (2012) suggestions for promoting conceptual change, some of the worksheets required students to recognize their preconceived notions and monitor their own learning and re-learning. For example, in one worksheet, students recorded their preconceptions about a social issue (e.g., marijuana use) at the beginning of the lecture and returned to the same question at the end of class. Through this process, students gain a physical record of how their beliefs evolved, whether by recognizing and adjusting preconceived notions or by deepening the justifications for their beliefs.

Table 1: Sample Assessment Rubric

| Score: 3 | Score: 2 | Score: 1 |
|---|---|---|
| Entry is thoughtful, thorough and specific. Author draws on relevant course material where appropriate. Author demonstrates original thinking. Entries correspond to questions asked. | Entry is relevant but may be vague or generic. Author could improve the response by making it more specific, thoughtful or complete. | Entry is unclear, irrelevant, incomplete or demonstrates a lack of understanding of core concepts. |

Outcomes: Lessons Learned

We found the reflective worksheets were effective because they gave students time to think about what they were learning and, over time, increased their awareness of how knowledge is constructed in the discipline. For us as instructors, the worksheets were a useful tool for monitoring students’ learning and their ‘take-away’ messages from the lectures. We also used the worksheets as a starting point in the next lecture to clarify any misunderstandings.

Overall, we found that while the reflective worksheets seemed to be appreciated by all students, EAL students specifically benefited from them in a number of ways. First, the guided questions gave students additional time to think about the topic at hand and to prepare before classroom discussion. Rather than being cold-called, students could use this reflective time to gather their thoughts and actively process what they had just learned. Second, students were able to explore the structure of academic discourse within sociology. As students learn through different disciplinary lenses, these worksheets reveal how a sociologist approaches a topic. In our program, international EAL students also take courses such as psychology, academic writing, and political science. Each of these disciplines engages with a topic using a different lens and language, and the worksheets made sociology’s approach explicit. Finally, the worksheets allowed students to reflect on both the content and the way language is used within sociology. For example, the worksheets gave students time to brainstorm and consider what questions are explored from a disciplinary perspective and what counts as evidence. Furthermore, when given time to reflect on the strength of disciplinary evidence, students can then determine which language features are most appropriate for presenting that evidence, such as whether hedges (e.g., may indicate, possibly suggests) or boosters (e.g., definitely proves) fit better. When working with international EAL students, it is especially important to make language features explicit so that students can, in turn, take ownership of those features in their own language use. Looking forward, these worksheets can help guide both EAL and non-EAL students’ awareness of how knowledge is constructed in the discipline and how language can be used to reflect and demonstrate their disciplinary understanding.

References

Aghaie, R., & Zhang, L. J. (2012). Effects of explicit instruction in cognitive and metacognitive reading strategies on Iranian EFL students’ reading performance and strategy transfer. Instructional Science, 40(6), 1063-1081.

Colley, B. M., Bilics, A. R., & Lerch, C. M. (2012). Reflection: A key component to thinking critically. The Canadian Journal for the Scholarship of Teaching and Learning, 3(1). https://doi.org/10.5206/cjsotl-rcacea.2012.1.2

Davis, M., & Hult, R. E. (1997). Effects of writing summaries as a generative learning activity during note taking. Teaching of Psychology, 24(1), 47-50.

Embo, M. P. C., Driessen, E., Valcke, M., & Van Der Vleuten, C. P. (2014). Scaffolding reflective learning in clinical practice: A comparison of two types of reflective activities. Medical Teacher, 36(7), 602-607.

Harrington, C., & Zakrajsek, T. (2017). Dynamic lecturing: Research-based strategies to enhance lecture effectiveness. Stylus Publishing, LLC.

Kaczmarzyk, M., Francikowski, J., Łozowski, B., Rozpędek, M., Sawczyn, T., & Sułowicz, S. (2013). The bit value of working memory. Psychology & Neuroscience, 6(3), 345-349.

Machaal, B. (2015). Could explicit training in metacognition improve learners’ autonomy and responsibility? Arab World English Journal, 6(1), 267.

Mayer, R. E. (2009). Multimedia learning (2nd ed.). Cambridge University Press.

Muncy, J. A. (2014). Blogging for reflection: The use of online journals to engage students in reflective learning. Marketing Education Review, 24(2), 101-114. https://doi.org/10.2753/MER1052-8008240202

Tanner, K. D. (2012). Promoting student metacognition. CBE-Life Sciences Education, 11(2), 113-120.