Why Seeing Others as “The Little Engines that Could” beats Seeing Them as “The Little Engines Who Were Unskilled and Unaware of It”

by Ed Nuhfer, Ph.D., Professor of Geology, Director of Faculty Development and Director of Educational Assessment, California State Universities (retired)

What is Self-Assessment?

At its root, self-assessment registers as an affective feeling of confidence in one’s ability to perform in the present. We can become consciously mindful of that feeling and begin to distinguish the feeling of being informed by expertise from the feeling of being uninformed. The feeling of ability to rise in the present to a challenge is generally captured by the phrase “I think I can….” Studies indicate that we can improve our metacognitive self-assessment skill with practice.

Quantifying Self-Assessment Skill

Measuring self-assessment accuracy lies in quantifying the difference between a felt competence to perform and a measure of the actual competence demonstrated. However, what at first glance appears to be a simple subtraction has proven to be a nightmarish challenge to researchers’ efforts to present data clearly and interpret it accurately. I speak of this “nightmare” with personal familiarity. Some colleagues and I recently summarized different kinds of self-assessments, self-assessment’s relationship to self-efficacy, the importance of self-assessment to achievement, and the complexity of interpreting self-assessment measurements (Nuhfer and others, 2016; 2017).

Can we or can’t we do it?

The children’s story The Little Engine that Could is a well-known tale of the power of positive self-assessment. The throbbing “I think I can, I think I can…” and the success that follows offer an uplifting view of humanity’s ability to succeed. That view is close to the traits of the “Growth Mindset” of Stanford psychologist Carol Dweck (2016). It is certainly more uplifting than an alternative title, “The Little Engine that Was Unskilled and Unaware of It,” which predicts a disappointing ending to “I think I can, I think I can….” The dismal idea that our possible competence is capped by what nature conferred at birth is a close analog to the traits of Dweck’s “Fixed Mindset,” which her research revealed as toxic to intellectual development.

As writers of several Improve with Metacognition blog entries have noted, “Unskilled and Unaware of It” are key words from the title of a seminal research paper (Kruger & Dunning, 1999) that offered one of the earliest credible attempts to quantify the accuracy of metacognitive self-assessment. That paper noted that some people were extremely unskilled and unaware of it. Less than a decade later, psychologists were claiming: “People are typically overly optimistic when evaluating the quality of their performance on social and intellectual tasks” (Ehrlinger and others, 2008). Today, laypersons cite the “Dunning-Kruger Effect” and often use it to label any individual or group that they dislike as “unskilled and unaware of it.” We saw the label being applied wholesale in the 2016 presidential election, not just to the candidates but also to the candidates’ supporters.

Self-assessment and vulnerability

Because self-assessment is about taking stock of ourselves rather than judging others, using the Dunning-Kruger Effect to label others is already on shaky ground. But are those we are tempted to label as “unskilled and unaware of it” likely to deserve the label? While the consensus in the literature of psychology seems to indicate that they are, our investigation of the numeracy underlying that consensus indicates otherwise (Nuhfer and others, 2017).

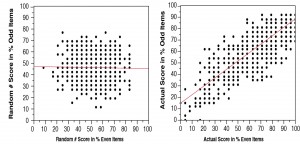

We think that nearly two decades of studies concluding that people are “…typically overly optimistic…” replicated one another because they all relied on variants of a unique graphic introduced in the seminal paper in 1999. Applied to either actual data or random numbers, these graphs generate artifact patterns that match those expected from a Dunning-Kruger Effect, and the artifacts are easily mistaken for expressions of actual human self-assessment traits.
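To make the artifact concrete, here is a minimal sketch in Python. It is an illustration, not the simulation published in the Numeracy papers. It assumes the graphic convention in question groups participants into quartiles by actual score and plots each quartile's mean self-assessed score beside its mean actual score. The function name `quartile_means`, the uniform 0 to 100 score range, and the sample size are all hypothetical choices made for this example.

```python
import random
from statistics import mean


def quartile_means(n=10_000, seed=0):
    """Return (mean actual, mean self-assessed) for each quartile of actual score.

    Both scores are drawn independently at random, so any pattern in the
    output comes from the sorting-and-grouping step, not from human traits.
    """
    rng = random.Random(seed)
    # Each pair is (actual score, self-assessed score); the two are unrelated.
    pairs = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(n)]
    pairs.sort(key=lambda pair: pair[0])  # rank "participants" by actual score

    size = n // 4
    rows = []
    for q in range(4):
        chunk = pairs[q * size:(q + 1) * size]
        actual = mean([a for a, _ in chunk])
        self_rated = mean([s for _, s in chunk])
        rows.append((actual, self_rated))
    return rows


if __name__ == "__main__":
    for number, (actual, self_rated) in enumerate(quartile_means(), start=1):
        print(f"Quartile {number}: actual {actual:5.1f}, self-assessed {self_rated:5.1f}")
```

Because the random self-assessed scores average near the midpoint in every quartile, the lowest quartile appears to overestimate badly and the highest appears to underestimate, even though no simulated "person" has any self-assessment trait at all.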

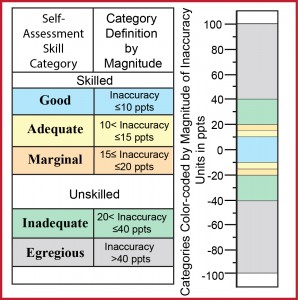

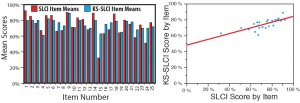

Once we gained some understanding of the hazards presented by the devilish nature of self-assessment measures, our quantitative results showed that people, in general, have a surprisingly good awareness of their capabilities (Nuhfer and others, 2016, 2017). About half of our studied populace of over a thousand students and faculty accurately self-assessed their performance within ±10 percentage points (ppts), and about two-thirds proved accurate within ±15 ppts. About 25% might qualify as having inadequate self-assessment skills (off by more than 20 ppts in either direction), but only about 5% of our academic populace might merit the label “unskilled and unaware of it” (overestimated their abilities by 30 ppts or more). Odds seem high against a randomly selected person being seriously “unskilled and unaware of it” and are very high against this label being validly applicable to a group.
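The bands quoted above can be expressed as a short Python sketch. It assumes both scores are percentages and that the error is the self-assessed score minus the demonstrated score, so a positive value means overestimation. The function names and band labels are hypothetical, and the 15 to 20 ppts band is not given a label in the text, so the wording for it here is only a placeholder.

```python
def signed_error(self_rated, actual):
    """Self-assessed score minus demonstrated score, in percentage points."""
    return self_rated - actual


def band(error):
    """Place a signed error (ppts) into the bands quoted in the text."""
    if error >= 30:
        # The only band the text ties to "unskilled and unaware of it".
        return "overestimates by 30+ ppts"
    size = abs(error)
    if size <= 10:
        return "within 10 ppts"
    if size <= 15:
        return "within 15 ppts"
    if size <= 20:
        return "within 20 ppts"  # placeholder: the text does not label this band
    return "off by more than 20 ppts"


if __name__ == "__main__":
    # Hypothetical (self-assessed, actual) pairs, for illustration only.
    for self_rated, actual in [(75, 70), (60, 72), (90, 55), (40, 65)]:
        error = signed_error(self_rated, actual)
        print(f"self {self_rated}, actual {actual}: {error:+d} ppts -> {band(error)}")
```

Checking the 30 ppts overestimate first keeps that band separate from the broader "more than 20 ppts" group, which also includes people who underestimate themselves.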

Others often rise to the expectations we have of them.

Consider the collective effects of people’s accepting beliefs about themselves and others as “unskilled and unaware of it.” This negative perspective can predispose an organization to accept, as a given, that people are less capable than they really are. Further, those of us with power, such as instructors over students or tenured peers over untenured instructors, should become aware of a term called “gaslighting.” In gaslighting, our negatively biased actions or comments may take away the self-confidence of others who accept us as credible, trustworthy, and important to their lives. This type of influence can lead to lower performance, thus seeming to substantiate the initial negative perspective. When gaslighting is deliberate, it constitutes a form of emotional abuse.

Aren’t you CURIOUS yet?

Wondering about your self-assessment skills and how they compare with those of novices and experts? Give yourself about 45 minutes and try the self-assessment instrument used in our research at <http://tinyurl.com/metacogselfassess>. You will receive a confidential report if you furnish your email at the end of completing that self-assessment.

Several of us, including our blog founder Lauren Scharff, will be presenting the findings and implications of our recent numeracy studies in August, at the Annual Meeting of the American Psychological Association in Washington DC. We hope some of our fellow bloggers will be able to join us there.

References

Dweck, C. (2016). Mindset: The New Psychology of Success. New York: Ballantine.

Ehrlinger, J., Johnson, K., Banner, M., Dunning, D., and Kruger, J. (2008). Why the unskilled are unaware: Further explorations of absent self-insight among the incompetent. Organizational Behavior and Human Decision Processes 105: 98–121. http://dx.doi.org/10.1016/j.obhdp.2007.05.002

Kruger, J. and Dunning, D. (1999). Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology 77: 1121–1134. http://dx.doi.org/10.1037/0022-3514.77.6.1121

Nuhfer, E. B., Cogan, C., Fleisher, S., Gaze, E., and Wirth, K. (2016). Random number simulations reveal how random noise affects the measurements and graphical portrayals of self-assessed competency. Numeracy 9 (1): Article 4. http://dx.doi.org/10.5038/1936-4660.9.1.4

Nuhfer, E. B., Cogan, C., Fleisher, S., Wirth, K., and Gaze, E. (2017). How random noise and a graphical convention subverted behavioral scientists’ explanations of self-assessment data: Numeracy underlies better alternatives. Numeracy 10 (1): Article 4.