by Ed Nuhfer, California State Universities (retired)

Karl Wirth, Macalester College

Christopher Cogan, Memorial University

McKensie Kay Phillips, University of Wyoming

Matthew Rowe, University of Oklahoma

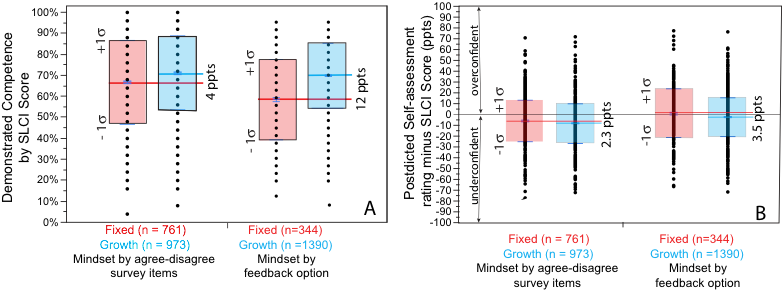

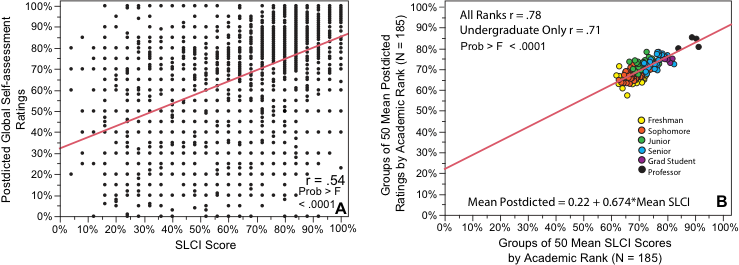

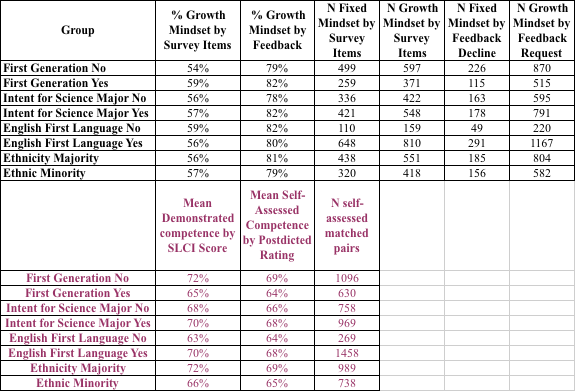

Early adopters of knowledge surveys (KSs) recognized the instrument’s dual benefits: supporting student learning and assessing the learning a course or program produces. Here, we focus on a third benefit, developing students’ metacognitive awareness through self-assessment accuracy.

Communicating self-assessed competence

Initially, we simply used test and quiz questions as the KS items. As the importance of the affective domain became more widely accepted, we began stressing affect’s role in learning and self-assessment by writing each knowledge survey item with an overt affective self-assessment root, such as “I can…” or “I am able to…”, followed by a cognitive content-outcome challenge. When explaining the knowledge survey to students, we direct their attention to these affective roots, which matter both when students rate their self-assessed competence and when they later write their own items.

We retain the original three-choice response scale, which expresses relative competence as no competence, partial competence, or high competence. Research shows three-choice scales to be as valid and reliable as longer ones, but we favor the shorter scale chiefly because it helps participants address KS items well. Once participants understand the meaning of the three choices and realize that the choices are identical for every item, they can focus on each item and rate their authentic feeling about meeting its cognitive challenge without being distracted by more complex response choices.

We find that the most illuminating answer to a student’s self-assessment dilemma, “How do I know when I can rate that I can do this well?”, is “When I know that I can teach another person how to meet this challenge.”

Backward design

We favor backward design for constructing topical sections within a knowledge survey, starting with the primary concept students must master to understand that topic. Then we work backward, building successive items that support that understanding by repeatedly asking, “What do students need to know to address the item above?” and filling in the needed detail. Sometimes we work all the way down to the definitions of terms needed to address the preceding items.

Building in more detail and structure than we initially sensed was necessary, especially for introductory-level undergraduates, is not “handing out the test questions in advance.” Instead, this KS structure uses examples to show how seemingly disconnected observations and facts, once students reach to connect them, reveal the unifying meaning of a “concept.” Conceptual thinking enables transferability and creativity when students develop habits of mind that dare them to attempt “outrageous connections.”

The feeling of knowing and awareness of metadisciplinary learning

Students learn that convergent challenges, which demand right-versus-wrong answers, feel different from divergent challenges, which call for reasonable-versus-unreasonable responses. Consider learning “What is the composition of pyrite?” and “Calculate the area of a triangle with a height of 50 meters and a base of 10 meters.” Then contrast the feeling required to learn “What is a concept?” or “What is science?”

The “What is science?” query is especially poignant. Teaching specialty content in course units, together with the courses’ accompanying college textbooks, essentially bypasses the significant metadisciplinary ways of knowing of science, humanities, social science, technology, arts, and numeracy. Instructors like Matt Rowe design courses to overcome this bypassing and to focus on this crucial conceptual understanding (see the video section at 25:01–29:05).

Knowledge surveys written to overtly provoke metadisciplinary awareness aid in designing and delivering such courses. For example, a 300-item general geology KS opened with ten metadisciplinary items, two of which follow.

- I can describe the basic methods of science (methods of repeated experimentation, historical science, and modeling) and provide one example of each method’s application in geological science.

- I can provide two examples of testable hypothesis statements and one example of an untestable hypothesis.

Students learned that they would develop the understanding needed to address these ten items as the course progressed. The presence of the items in the KS ensured that the instructor did not forget to support that understanding. For ideas about varied metadisciplinary outcomes, examine this poster.

Illuminating temporal qualities

Because knowledge surveys establish baseline data and collect detailed information throughout an entire course or program, they are practical tools through which students and instructors can understand qualities they seldom consider, notably temporal qualities. These include magnitudes (How great?), rates (How quickly?), duration (How long?), order (What sequence?), frequency (How often?), and patterns (What kind?).

More specifically, knowledge surveys reveal magnitude (How great were changes in learning?), rates (How quickly did we cover material relative to how well it was learned?), duration (How long did gaining an understanding of specific content take?), order (What learning should precede other learning?), and patterns (Does all understanding come slowly and gradually, or does some arrive as punctuated “Aha moments”?).

Knowledge survey patterns reveal how easily we underestimate the teaching effort needed to produce significant learning change. Item-by-item arrays of pre- and post-course knowledge surveys typically show a high correlation: items with low pre-course confidence tend to remain relatively low after the course, even as overall confidence rises. Instructors may find it challenging to produce the changes whereby the troughs of lowest confidence in pre-course knowledge surveys become peaks of high confidence in post-course knowledge surveys. Success requires attention to frequency (repetition with take-home drills), duration (giving more time to assignments that address difficult content), order (optimizing the sequence of learning material), and likely a switch to more active learning modalities, including students authoring their own drills, quizzes, and KS items.

Studies in progress by author McKensie Phillips showed that students were more confident with the material at the end of the semester than at the end of each individual unit. This held even for early units, where researchers expected confidence to decrease given the time elapsed between the end of the unit and the post-semester KS. The results indicate that some knowledge mastery is cumulative: students are intertwining material from unit to unit and practicing metacognition by re-engaging with the KS to deepen their understanding over time.

Student-authored knowledge surveys

Introducing students to KS authoring must start with a class knowledge survey authored by the instructor, so that students have an example that discloses the kinds of thinking used to construct a KS. Author Chris Cogan routinely tasks teams of 4–5 students with summarizing the content at the end of the hour (or week) by writing their own survey items for it. This typically takes about 10 minutes at the end of class. The instructor compiles the student drafts, looks for potential misconceptions, and posts an edited summary version back to the class.

Items written by beginning student authors tend to be brief, too vague to answer, or focused on the lowest Bloom levels. However, weekly feedback from the instructor has an impact: students become better able to write helpful survey items and, more importantly, better able to acquire knowledge from class sessions. Authoring items begins to improve thinking, self-assessment, and justified confidence.

Recalibrating for self-assessment accuracy

Students with large miscalibrations in self-assessment accuracy should wonder, “What can I do about this?” Pre-exam knowledge survey data enable sophisticated post-exam reflection through exam wrappers (Lovett, 2013). With their pre-exam knowledge survey responses and graded exam in hand, students can do a “deep dive” into the two artifacts to understand what they can do.

Instructors can coach students to become aware of what their KS responses indicate about their mastery of the content. Where large discrepancies exist between knowledge survey responses and the graded exam, instructors can prompt students to reflect on how these arose. Did students use their KS results to inform their actions (e.g., additional study) before the exam? Did different topics or sections of the exam produce different degrees of miscalibration? Did self-assessment accuracy differ by Bloom level?
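The last two questions lend themselves to a simple calculation. The Python sketch below is hypothetical and is not drawn from the authors’ studies: it assumes each exam question can be paired with the KS item it addresses and tagged with a topic and a Bloom level, and it assumes the three KS choices map onto expected scores of 0.0, 0.5, and 1.0 of a question’s points. It reports the mean signed gap between self-assessment and exam performance for each group, so positive values flag overconfidence and negative values flag underconfidence. All names and data are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class ItemResult:
    """One exam question paired with the KS item it addresses (hypothetical pairing)."""
    topic: str
    bloom: str          # e.g. "remember", "apply", "evaluate"
    ks_rating: int      # assumed coding: 0 = no, 1 = partial, 2 = high competence
    exam_score: float   # fraction of the question's points earned, 0.0 to 1.0


def miscalibration_by(results: list[ItemResult], key: str) -> dict[str, float]:
    """Mean signed gap (self-assessment minus exam score) per group.

    key selects the grouping field: "topic" or "bloom". Positive values
    indicate overconfidence; negative values indicate underconfidence.
    """
    if key not in ("topic", "bloom"):
        raise ValueError("key must be 'topic' or 'bloom'")
    gaps: dict[str, list[float]] = defaultdict(list)
    for r in results:
        predicted = r.ks_rating / 2  # assumed mapping of 0/1/2 onto 0.0/0.5/1.0
        gaps[getattr(r, key)].append(predicted - r.exam_score)
    return {group: mean(values) for group, values in gaps.items()}


# Invented results for one student, for illustration only.
results = [
    ItemResult("minerals", "remember", 2, 1.0),
    ItemResult("minerals", "apply", 2, 0.5),
    ItemResult("plate tectonics", "apply", 1, 0.0),
    ItemResult("plate tectonics", "evaluate", 0, 0.5),
]
by_topic = miscalibration_by(results, "topic")
by_bloom = miscalibration_by(results, "bloom")
print("by topic:", by_topic)
print("by Bloom level:", by_bloom)
```

Grouping by topic and by Bloom level can tell different stories. In this invented example, the plate tectonics average looks perfectly calibrated only because an overconfident “apply” item and an underconfident “evaluate” item cancel out.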

Most importantly, after conducting the exam wrapper analysis, students with significant miscalibration should each name one thing they will do differently to improve performance. Reminding students to revisit their post-exam analysis well before the next exam is helpful. IwM editor Lauren Scharff noted that her knowledge surveys and tests revealed that most of her psychology students gradually improved their self-assessment accuracy across the semester and used the knowledge surveys more consistently as an ongoing learning tool rather than just a last-minute knowledge check.

Takeaways

We construct and use knowledge surveys differently than we did when we began two decades ago. For readers, we provide a downloadable example of a contemporary knowledge survey covering this guest-edited blog series, along with an active Google® Forms online version.

We have learned that mentoring for metacognition can measurably increase students’ self-assessment accuracy as it supports growing their knowledge, skills, and capacity for higher-order thinking. Knowledge surveys offer a powerful tool for instructors who aim to direct students toward understanding the meaning of becoming educated, becoming learning experts, and understanding themselves through metacognitive self-assessment. There remains much to learn.